The below is written in the hope it will be helpful for my fellow DDs.

Why use build after push?

Simple answer: to save time, to always use a clean build environment, to automate more tests.

Real answer: because you are lazy and tired of always having to type these build commands, and because watching the IRC channel is more fun than watching the build process.

Other less important answers: building takes some CPU time, and makes your computer run slower for other tasks. It is really nice that building doesn't consume CPU cycles on your workstation/laptop, and that a server does the work, not disturbing you while you are working. It is also super nice that it can maintain a Debian repository for you after a successful build, available for everyone to use and test, which would be harder to achieve on your work machine (which may be behind a router doing NAT, or even sometimes turned off, etc.). It's also kind of fun to have an IRC robot telling everyone when a build is successful, so that you don't have to tell them: they can see it and start testing your work.

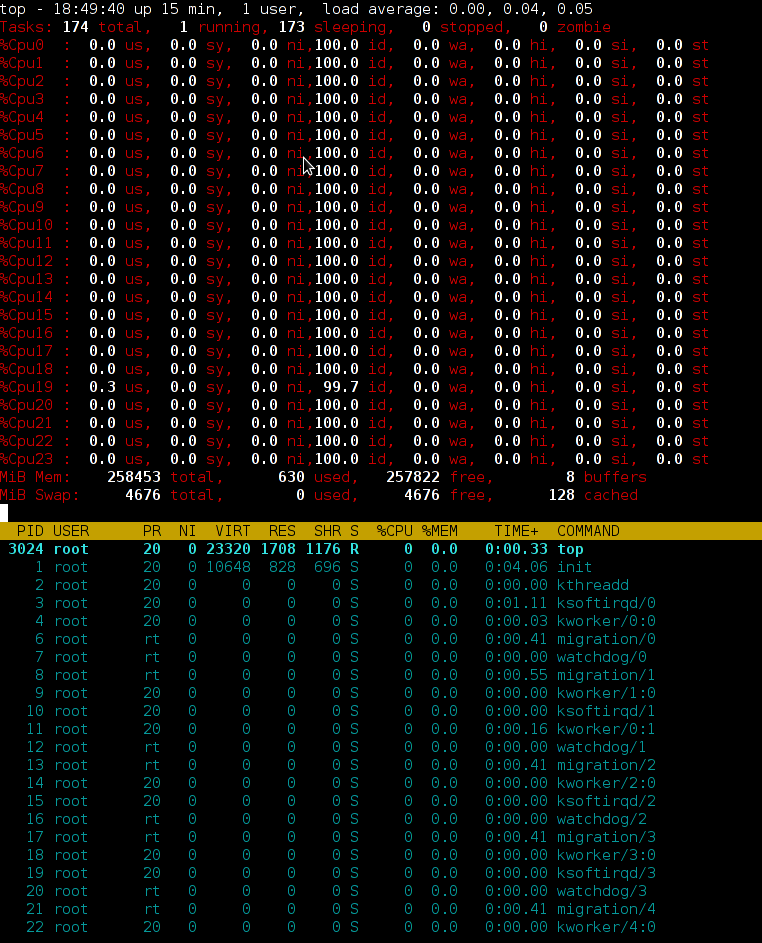

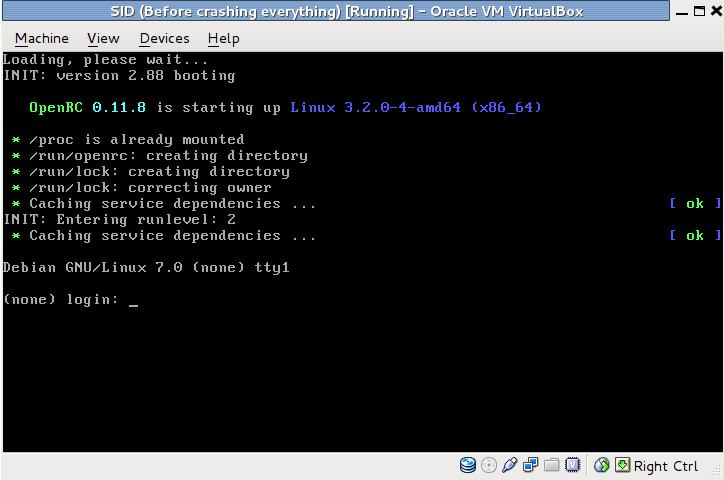

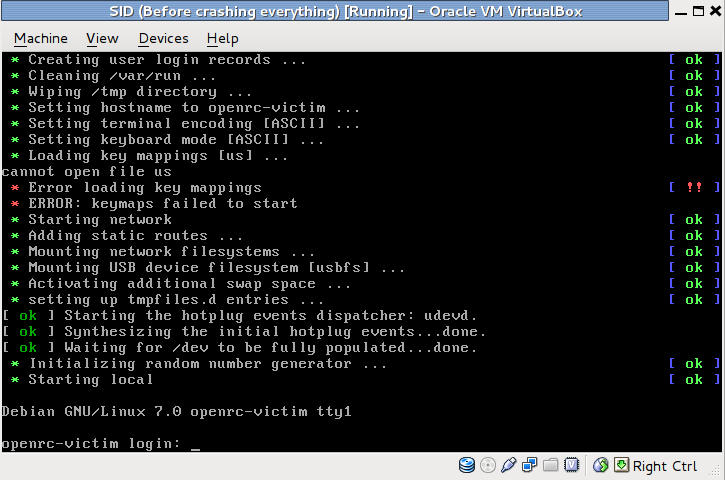

Install a SID box that can build with cowbuilder

- Set up a SID machine / server.

- Install a build environment with git-buildpackage, pbuilder and cowbuilder (apt-get install all of these).

- Initialize your cowbuilder with: cowbuilder --create.

- Make sure that, outside of your chroot, you can run ./debian/rules clean for all of your packages, because that will be called before moving to the cowbuilder chroot. This means you have to install all the build-dependencies involved in the clean process of your packages outside the base.cow of cowbuilder as well. In my case, this means apt-get install openstack-pkg-tools python-setuptools python3-setuptools debhelper po-debconf python-setuptools-git. This part is the most boring one, but remember you can solve these problems when you see them (no need to worry too much until you see a build error).

- Edit /etc/git-buildpackage/gbp.conf, and make sure that under [DEFAULT] you have a line showing builder=git-pbuilder, so that cowbuilder is used by default in the system when using git-buildpackage (and therefore, by Jenkins as well).
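To double-check that last step, the setting can be read back from the file. The following is only a minimal sketch: the helper name gbp_default_builder is made up for this post (it is not shipped with git-buildpackage), and the parsing assumes the simple "key = value" layout with the [DEFAULT] header on its own line.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with git-buildpackage): print the value of
# "builder" from the [DEFAULT] section of a gbp.conf-style file.
gbp_default_builder() {
    awk '
        # A section header: remember whether it is [DEFAULT].
        /^[ \t]*\[/ {
            in_default = ($0 ~ /^[ \t]*\[DEFAULT\]/)
            next
        }
        # Inside [DEFAULT], print what follows "builder =" and stop.
        in_default && /^[ \t]*builder[ \t]*=/ {
            sub(/^[^=]*=[ \t]*/, "")
            sub(/[ \t]+$/, "")
            print
            exit
        }
    ' "$1"
}
```

Running gbp_default_builder /etc/git-buildpackage/gbp.conf should print git-pbuilder once the file is set up as described; an empty result means the line is missing or sits in another section.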

Install Jenkins

WARNING: Before really installing, you should probably read what's below (e.g. Securing Jenkins).

Simply apt-get install jenkins from experimental (the SID version has some security issues, and has been removed from Wheezy at the request of the maintainer).

Normally, after installing jenkins, you can access it through:

http://<ip-of-your-server>:8080/

There is no auth by default, so anyone will be able to access your jenkins web GUI and start any script under the jenkins user (sic!).

Jenkins auth

Before doing anything else, you have to enable Jenkins auth. Otherwise, everything is accessible from the outside, meaning that, more or less, anyone browsing your Jenkins server could be allowed to run any command. It might sound simple, but in fact, Jenkins auth is tricky to activate, and it is very easy to get yourself locked out, with no working web access. So here are the steps:

1. Click on Manage Jenkins then on Configure system

2. Check the enable security checkbox

3. Under "security realm", select "Jenkins's own user database" and leave "allow users to sign up" checked. Important: leave "Anyone can do anything" selected for the moment (otherwise, you will lock yourself out).

5. At the bottom of the screen, click on the SAVE button.

6. On the top right, click to login / create an account. Create yourself an account, and stay logged in.

7. Once logged in, go back to "Manage Jenkins" -> "Configure system", under security.

8. Switch to "Project based matrix authentication strategy". Under "User/group to add", enter the new login you've just created, and click on "Add".

9. Select absolutely all checkboxes for that user, so that you make yourself an administrator.

10. For the Anonymous user, for Job, check Read, Build and Workspace. For Overall select Read.

11. At the bottom of the screen, hit save again.

Now, anonymous (i.e. not logged-in) users should be able to see all projects and click on the "Build now" button. Note that if you lock yourself out, the way to fix it is to turn off Jenkins, edit config.xml, remove the useSecurity element and everything in authorizationStrategy and securityRealm, then restart Jenkins. I had to do that multiple times until I had it right (as it isn't really obvious that you have to leave Jenkins completely insecure while creating a new user).
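If you end up doing that recovery more than once, the config.xml edit can be scripted. This is a rough sketch under a few assumptions: the function name strip_jenkins_security is mine, each of the three elements starts on its own line (which is how Jenkins writes the file), and the result goes to standard output so that the original file is never touched.

```shell
#!/bin/sh
# Sketch: print a copy of a Jenkins config.xml with the security settings
# removed. Assumes each element starts on its own line, as Jenkins writes it.
strip_jenkins_security() {
    sed \
        -e '/<useSecurity>.*<\/useSecurity>/d' \
        -e '/<authorizationStrategy[^>]*\/>/d' \
        -e '/<securityRealm[^>]*\/>/d' \
        -e '/<authorizationStrategy/,/<\/authorizationStrategy>/d' \
        -e '/<securityRealm/,/<\/securityRealm>/d' \
        "$1"
}
```

With Jenkins stopped, something like strip_jenkins_security config.xml > config.xml.new, followed by a look at the result and a mv over the original, should bring back the wide-open default. Where config.xml lives depends on your install (on a Debian package install it is probably under /var/lib/jenkins, but check before running anything), and keeping a backup copy first is a good idea.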

Securing Jenkins: Proxy Jenkins through apache to use it over SSL

When doing a quick $search-engine search, you will see lots of tutorials on using Apache as a proxy, which seems to be the standard way to run Jenkins. Add the following to /etc/apache2/sites-available/default-ssl:

ProxyPass / http://localhost:8080/

ProxyPassReverse / http://localhost:8080/

ProxyRequests Off

Then perform the following commands on the shell:

htpasswd -c /etc/apache2/jenkins_htpasswd <your-jenkins-username>

a2enmod proxy

a2enmod proxy_http

a2enmod ssl

a2ensite default-ssl

a2dissite default

apache2ctl restart

Then disable access to the port 8080 of jenkins from outside:

iptables -I INPUT -d <ip-of-your-server> -p tcp --dport 8080 -j REJECT

Of course, this doesn't mean you shouldn't take the steps to activate Jenkins' own authentication, which is disabled by default (sic!).

Build a script to build packages in a cowbuilder

I thought it was hard. In fact it was not. All in all, this was kind of fun to hack. Yes, hack. What I did is yet another kind of 10km-long ugly shell script. The way to use it is simply: build-openstack-pkg <package-name>. On my build server, I have put that script in /usr/bin, so that it is accessible from the default path. Ugly, but it does the job!

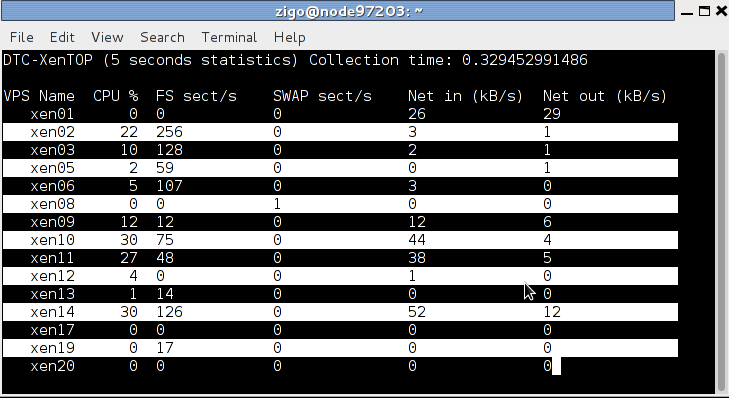

Jenkins build script for openstack

At the end of the script, scan_repo() generates the files a Debian repository needs in order to work, under /home/ftp. I use pure-ftpd to serve it. /home/ftp must be owned by jenkins:jenkins so that the build script can copy packages into it.
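For readers who cannot make out the screenshot, here is roughly what such a step can look like. This is a sketch and not my actual scan_repo(): the name scan_repo_sketch is made up, it assumes dpkg-dev is installed (for dpkg-scanpackages), and it only builds the simplest possible "flat" repository index.

```shell
#!/bin/sh
# Sketch of a scan_repo-like step (not the real function from the script):
# index every .deb found under the given directory as a flat APT repository.
scan_repo_sketch() {
    repo_dir="$1"
    (
        cd "$repo_dir" || exit 1
        # dpkg-scanpackages comes from dpkg-dev; /dev/null means "no override file".
        dpkg-scanpackages . /dev/null > Packages || exit 1
        gzip -9c Packages > Packages.gz
    )
}
```

Called as scan_repo_sketch /home/ftp after the packages have been copied there, it leaves Packages and Packages.gz next to them. A real setup would probably also want a signed Release file, which this sketch leaves out.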

This build script is by no means state of the art, and in fact, it's quite hack-ish (so I'm not particularly proud of it, but it does its job). If I am showing it in this blog post, it is just to show an example of what can be done. It is left as an exercise to the reader to create another build script adapted to their own needs, and write something cleaner and more modular.

Dependency building

Let's say that you are using the Built-Using: field, and that package B needs to be built if package A changes. Well, Jenkins can be configured to do that. Simply edit the configuration of project B (you will find it, it's easy).

My use case: for building Glance, Heat, Keystone, Nova, Quantum, Cinder and Ceilometer, which are all components of OpenStack, I have written a small (about 500 lines) library of shell functions, and an equally small (90 lines) Makefile, which are packaged in openstack-pkg-tools (so Nova, Glance, etc. all build-depend on openstack-pkg-tools). The shell functions are included in each maintainer script (debian/*.config and debian/*.postinst mainly) to avoid having some pre-depends that would break the debconf flow. The Makefile of openstack-pkg-tools is included in the debian/rules of each package.

In such a case, trying to manage the build process by hand is boring and time consuming (spending your time watching the build process of package A, so that you can manually start the build of package B, then wait again). But it is also error prone: it is easy to make a mistake in the build order, you can forget to dpkg -i the new version of package A, etc.

But that's not all. Probably at some point, you will want Jenkins to rebuild everything. Well, that's easy to do. Simply create a dummy project, and have the other projects build after that one. The build step could simply be: echo "Dummy project" as a shell script (I'm not even sure that's needed).

Configuring git to start a build on each push

In Jenkins, hover your mouse over the "Build now" link to see its URL. We just need to wget that URL from a hook in your Alioth repository. A small drawing is better than a long explanation:

    for i in $(ls /git/openstack) ; do
        # Assumption: the Jenkins job is named after the repository, minus any ".git" suffix.
        PROJ_NAME=${i%.git}
        echo "wget -q --no-check-certificate \
    https://<ip-of-your-server>/job/${PROJ_NAME}/build?delay=0sec \
    -O /dev/null" >/git/openstack/${i}/hooks/post-receive \
        && chmod 0770 /git/openstack/${i}/hooks/post-receive;
    done

The chmod 0770 is necessary if you don't want every Alioth user to be able to read the hook, and with it the address of your Jenkins web interface and the credentials of any htpasswd protection that you may add to your Jenkins box (I'm not covering that here, but it is fairly easy to add such protection). Note that all of the members of your Alioth group will still have access to this post-receive hook, containing the password of your htaccess, so you must trust everyone in your Alioth group not to do nasty things with your Jenkins.
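To make the point about the password concrete, here is what a hook with credentials could look like. Again, this is only a sketch: print_post_receive_hook is a name I made up, it assumes the Jenkins job name is passed in explicitly, and it assumes neither the user name nor the password contains a single quote.

```shell
#!/bin/sh
# Sketch: print the text of a post-receive hook that asks Jenkins to build a job.
# Arguments: <jenkins-base-url> <job-name> [<htpasswd-user> <htpasswd-password>]
print_post_receive_hook() {
    base_url="$1"
    job="$2"
    auth=""
    if [ -n "${3:-}" ]; then
        # wget sends these as HTTP basic auth, which matches an htpasswd setup.
        auth=" --user='$3' --password='${4:-}'"
    fi
    printf '#!/bin/sh\n'
    printf "wget -q --no-check-certificate%s -O /dev/null '%s/job/%s/build?delay=0sec'\n" \
        "$auth" "$base_url" "$job"
}
```

Redirect the output to hooks/post-receive in the repository and chmod 0770 it as before. The password ends up in clear text in that file, which is exactly why the permissions and the trust in your group members matter.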

Bonus point: IRC robot

If you would like to see the result of your build published on IRC, Jenkins can do that. Click on "Manage Jenkins", then on "Manage Plugins". Then click on "available" and check the box in front of "IRC plugin". Go to the bottom of the screen and click on "Add". Then check the box to restart Jenkins automatically. Once it has restarted, go again to "Manage Jenkins", then "Configure system". Select the "IRC Notification" and configure it to join the network and the channel you want. Click on "Advanced" to select the IRC nickname of your bot, and make sure you change the port (by default Jenkins has 194, when IRC normally uses 6667). Be patient when waiting for the IRC robot to connect / disconnect, as this can take some time.

Now, for each jenkins Job, you can tick the IRC Notification option.

Doing piuparts after build

One nice thing with automated builds is that most of the time, you don't need to wait staring at them. So you can add as many tests as you want: the Jenkins IRC robot will let you know the result of your build sooner or later anyway. So adding piuparts tests to the build script seems the correct thing to do. That is still on my todo list though, so maybe that will be for my next blog post.
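Since this is still on my todo list, take the following as a guess at what such a step might look like, not as something I run. The function name and the PIUPARTS_CMD variable are made up for the example (the latter so that you can do a dry run with echo), and keep in mind that piuparts itself needs root.

```shell
#!/bin/sh
# Sketch only: run piuparts on every .changes file found in a build result
# directory. PIUPARTS_CMD is a hypothetical knob so the tool can be swapped
# out (for example with "echo" for a dry run); piuparts itself needs root.
run_piuparts_on_results() {
    result_dir="$1"
    status=0
    for changes in "$result_dir"/*.changes; do
        # Skip the unexpanded pattern when the directory has no .changes file.
        [ -e "$changes" ] || continue
        ${PIUPARTS_CMD:-piuparts} -d sid "$changes" || status=1
    done
    return "$status"
}
```

The function returns non-zero as soon as one package fails, so calling it at the end of the build script would be enough for Jenkins (and therefore the IRC robot) to report the build as failed.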

OpenStack upstream was released today. Thanks to the release team and a big up to TTX for his work.

By the time you read this, probably all of my uploads have reached your local Debian mirror.

Please try Havana using either Sid from any Debian mirror, or using the Wheezy backports available here:

deb http://havana.pkgs.enovance.com/debian havana main
